A conceptual framework for the categorization of ethical risks in machine learning and artificial intelligence

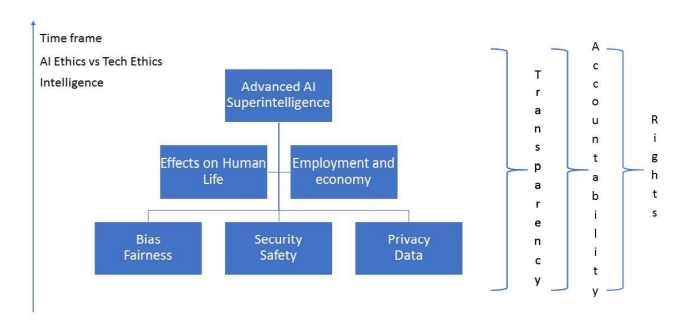

I have developed a conceptual framework for identifying and categorizing the ethical risks of machine learning (ML) and artificial intelligence (AI). The purpose of the framework is to clarify the big picture of these ethical risks. It contains the key categories of ethical risk, the most useful lenses for evaluating the broader development of the field, and scales on which the field can be positioned according to selected variables.

The framework is based on earlier literature and reports on AI ethics. Apart from a few details, the categorization is the one formed for the interviews of this study, which are processed and introduced in Chapter 3; it was originally based on the World Economic Forum's list of ethical risks in AI (World Economic Forum, 2016). Compared with the formulation used in the interviews, it contains three changes: the threat of manipulation has been moved under the category of security and safety; the ethical risk of algorithms and networks has been combined with privacy and data centralization; and the ethical concern of transparency has been reformulated as one of the overall lenses for assessing and analyzing the ethical risks.

The principal idea of this formulation is to clarify the complex reality of the ethical risks of AI/ML technologies by presenting three structures, or dimensions, of the field:

1) The different levels of the risks: The ethical risks of AI/ML exist on different levels, and only some of them are on the concrete level, which is usually what indicates a need for action.

2) The lenses that influence all the risks: Some ethical requirements for AI/ML act more like lenses that affect the risks and concerns on every level. For example, the requirement for transparency remains the same when moving to more advanced levels of AI.

3) The risks can be positioned on various scales: These scales help in perceiving the levels of the risks. For example, as the intelligence of AI systems increases, larger-scale and societal risks may become more relevant.